Voting Classifier Machine Learning Mastery

Machine learning classifiers go beyond simple data mapping, allowing users to constantly update models with new learning data and tailor them to changing needs. Not all classification predictive models support multi-class classification.


One approach to using binary classification algorithms for multi-class classification problems is to split the ...

In this tutorial, you discovered the essence of the stacked generalization approach to machine learning ensembles. A voting classifier is an ensemble learning method: a kind of wrapper that contains different machine learning classifiers and classifies the data by combining their votes.

Self-driving cars, for example, use classification algorithms to assign input image data to a category. In fact, constructing a strong learner from weak learners is the explicit goal of the boosting class of ensemble learning algorithms.

Whether it's a stop sign, a pedestrian, or another car, constantly learning and ...

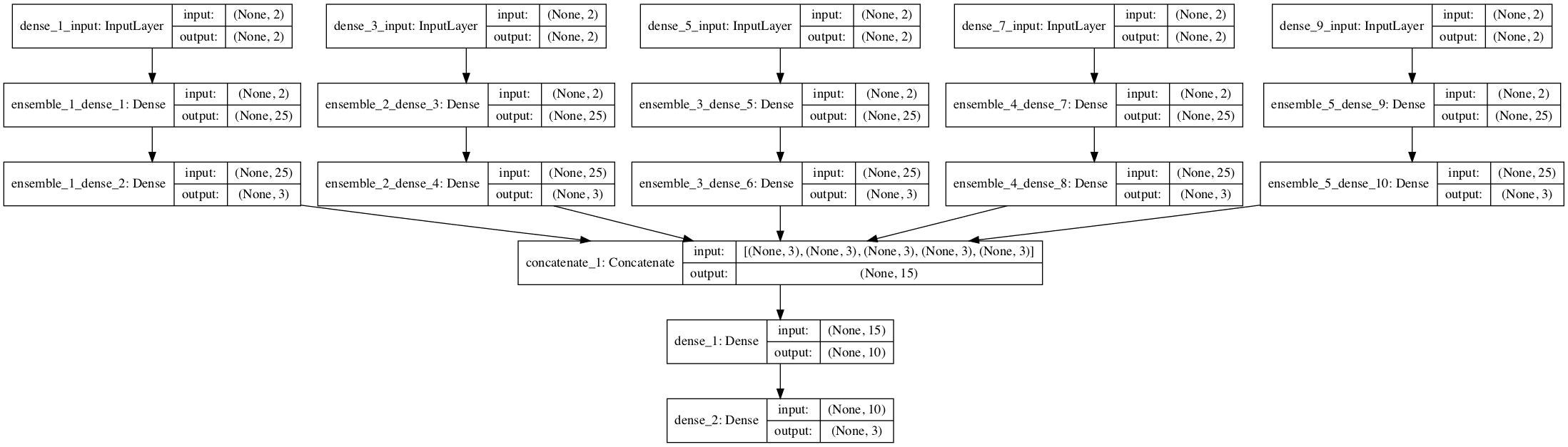

The VotingClassifier class can be used to combine the predictions from all of the models. There are hard (majority) and soft voting methods for deciding the target class. The prediction of each model is considered a vote.

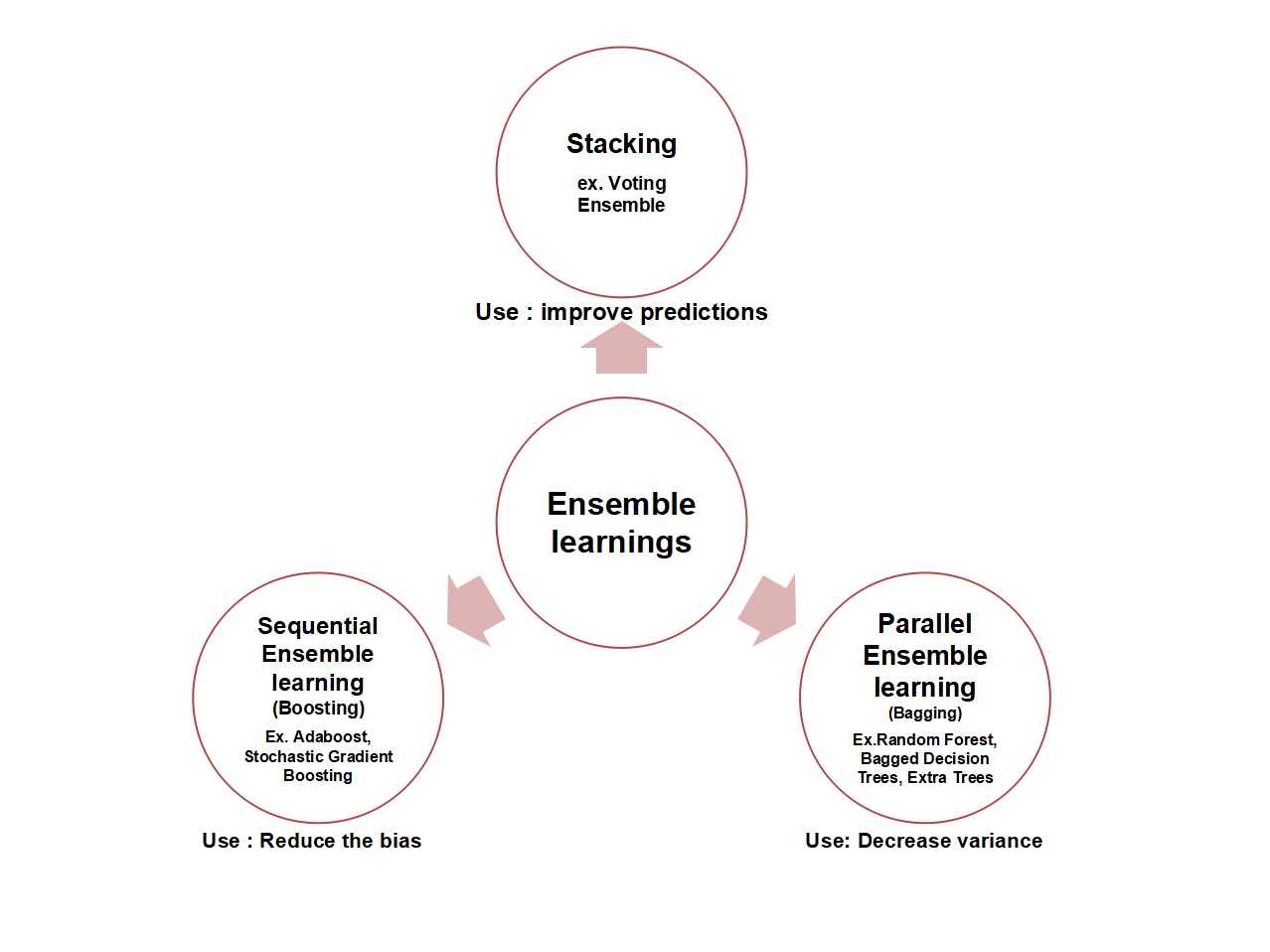

It is common to describe ensemble learning techniques in terms of weak and strong learners. Three main ensemble techniques are boosting, bagging, and stacking.

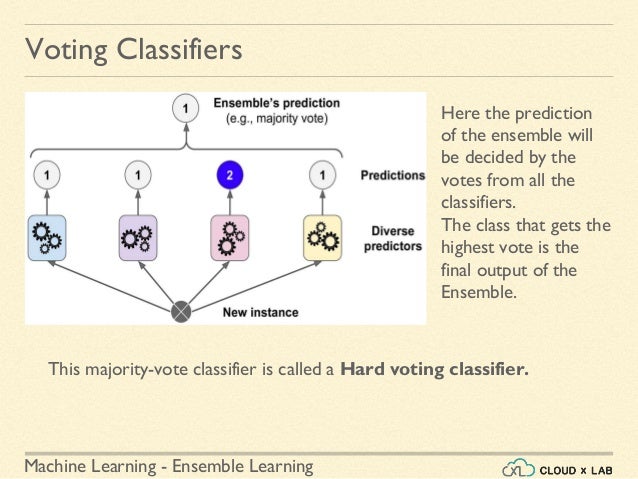

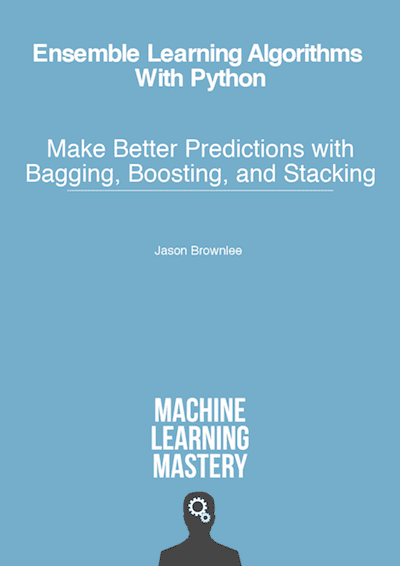

A horizontal voting ensemble can be developed in Python using Keras to improve the performance of a final multilayer Perceptron model for multi-class classification. Similarly, in machine learning classification we can also use the panel voting method.

Build multiple models from different classification algorithms and use a criterion to determine how best to combine them. Scikit-learn implements a voting classifier. Hard voting decides by vote count: the majority wins. In this technique, multiple models are used to make predictions for each data point.
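The majority rule described above can be sketched in a few lines of plain Python. This is only an illustration: the `hard_vote` helper and the three lists of predictions are hypothetical, not part of scikit-learn or of the original tutorial.

```python
from collections import Counter


def hard_vote(predictions):
    """Return the label that most models predicted for one data point."""
    counts = Counter(predictions)
    # most_common(1) returns [(label, count)]; on a tie, the label that
    # was seen first wins
    return counts.most_common(1)[0][0]


# Hypothetical predictions from three models for four data points
model_predictions = [
    ["cat", "dog", "dog", "cat"],  # model 1
    ["cat", "dog", "cat", "cat"],  # model 2
    ["dog", "dog", "cat", "dog"],  # model 3
]

# zip(*...) regroups the lists so each tuple holds all votes for one data point
final = [hard_vote(votes) for votes in zip(*model_predictions)]
print(final)
```

Each model contributes exactly one vote per data point, so a single confident model cannot override two models that agree with each other.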

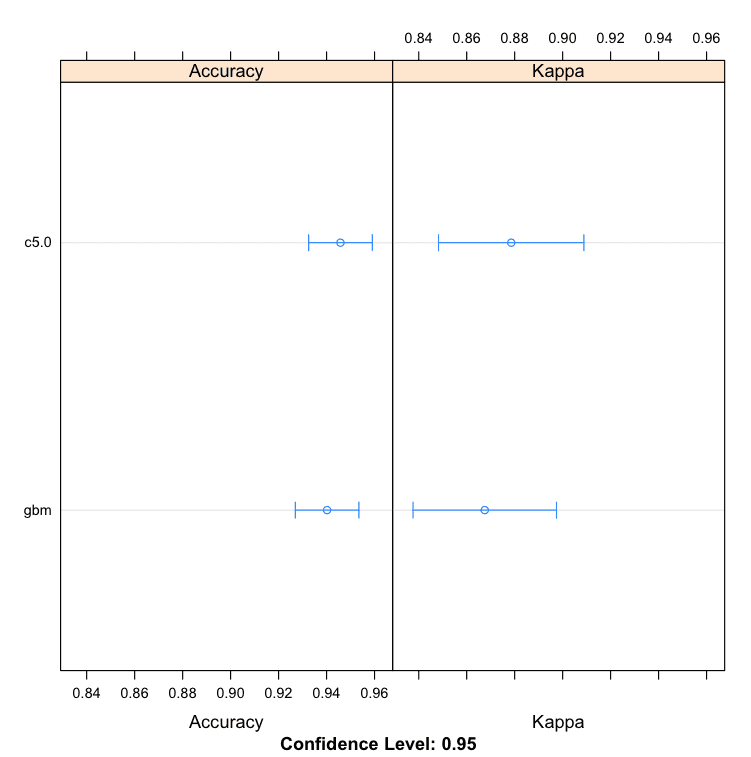

Before we start building ensembles, let's define our test set-up. The predictions which we get from the ... The stacking ensemble method for machine learning uses a meta-model to combine predictions from contributing members.
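As a rough sketch of stacking, scikit-learn's `StackingClassifier` fits a meta-model on the predictions of the contributing members. The choice of the iris dataset, the base models, the logistic regression meta-model, and the split parameters below are all assumptions made for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1, stratify=y
)

# Contributing members: (name, model) pairs, chosen here only as examples
base_models = [
    ("knn", KNeighborsClassifier()),
    ("tree", DecisionTreeClassifier(random_state=1)),
]

# The meta-model learns how to combine the members' cross-validated predictions
stack = StackingClassifier(
    estimators=base_models,
    final_estimator=LogisticRegression(max_iter=1000),
    cv=5,
)
stack.fit(X_train, y_train)
stack_accuracy = stack.score(X_test, y_test)
print(f"Stacking accuracy: {stack_accuracy:.3f}")
```

Unlike voting, where the combination rule is fixed, the combination here is itself learned from data.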

Practical Machine Learning Tools and Techniques, 2016. Although we may describe models as weak or strong generally, the terms have a specific ... The voting classifier, like any other machine learning algorithm, is used to fit the independent variables of the training dataset with the dependent variables: `from sklearn.datasets import load_iris`, `from sklearn.model_selection import train_test_split`, `iris` ...

It simply aggregates the findings of each classifier passed into the voting classifier and predicts the output class that receives the majority of the votes. A voting classifier is a machine learning model that trains on an ensemble of numerous models and predicts as output the class with the highest probability among them. There are three main techniques for creating an ensemble of machine learning algorithms in R.
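To make the probability-based (soft) variant concrete, here is a minimal sketch in plain Python. The `soft_vote` helper and the probability values are hypothetical, chosen so that soft voting and a majority vote disagree.

```python
def soft_vote(probabilities, labels):
    """Average per-class probabilities across models and pick the largest."""
    n_models = len(probabilities)
    # zip(*...) groups the probabilities of one class across all models
    averaged = [sum(column) / n_models for column in zip(*probabilities)]
    best = max(range(len(labels)), key=lambda i: averaged[i])
    return labels[best], averaged


labels = ["A", "B"]
# Hypothetical [P(A), P(B)] outputs from three models for one data point
probs = [
    [0.45, 0.55],  # model 1 leans towards B
    [0.40, 0.60],  # model 2 leans towards B
    [0.90, 0.10],  # model 3 is very confident in A
]

label, averaged = soft_vote(probs, labels)
print(label, [round(p, 3) for p in averaged])
```

Two of the three models prefer B, so a majority vote would pick B, but the averaged probability of A is higher because the third model is far more confident.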

Algorithms such as the Perceptron, Logistic Regression, and Support Vector Machines were designed for binary classification and do not natively support classification tasks with more than two classes. You can create ensembles of machine learning algorithms in R.
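One common way to apply a binary algorithm to a multi-class problem is the one-vs-rest strategy, which fits one binary model per class. The text does not name a specific strategy, so the use of scikit-learn's `OneVsRestClassifier`, the iris dataset, and the Perceptron settings below are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron
from sklearn.multiclass import OneVsRestClassifier

X, y = load_iris(return_X_y=True)  # three classes: 0, 1, 2

# Wrap a binary-style learner; one Perceptron is fit per class,
# each separating that class from all the others
ovr = OneVsRestClassifier(Perceptron(random_state=1))
ovr.fit(X, y)

n_binary_models = len(ovr.estimators_)
predictions = ovr.predict(X[:5])
print(n_binary_models, predictions)
```

The wrapper predicts the class whose binary model gives the strongest score, so the underlying algorithm never has to handle more than two classes at once.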

In this section we will look at each in turn. In other words, a very simple way to create an even better classifier. The max voting method is generally used for classification problems.

For example, we may desire to construct a strong learner from the predictions of many weak learners. The VotingClassifier class takes an `estimators` argument that is a list of tuples, where each tuple has a name and the model or modeling pipeline.
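The `estimators` argument described above might be used as follows. This is a sketch under stated assumptions: the iris dataset, the three member models, the names given to them, and the split parameters are illustrative choices, not taken from the original tutorial.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1, stratify=y
)

# A list of (name, model) tuples; a member can be a plain model or a pipeline
estimators = [
    ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("tree", DecisionTreeClassifier(random_state=1)),
    ("knn", KNeighborsClassifier(n_neighbors=5)),
]

# voting="hard" counts one vote per member and returns the majority class
ensemble = VotingClassifier(estimators=estimators, voting="hard")
ensemble.fit(X_train, y_train)
accuracy = ensemble.score(X_test, y_test)
print(f"Hard voting accuracy: {accuracy:.3f}")
```

Passing `voting="soft"` instead averages the members' predicted probabilities, which requires every member to implement `predict_proba`.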


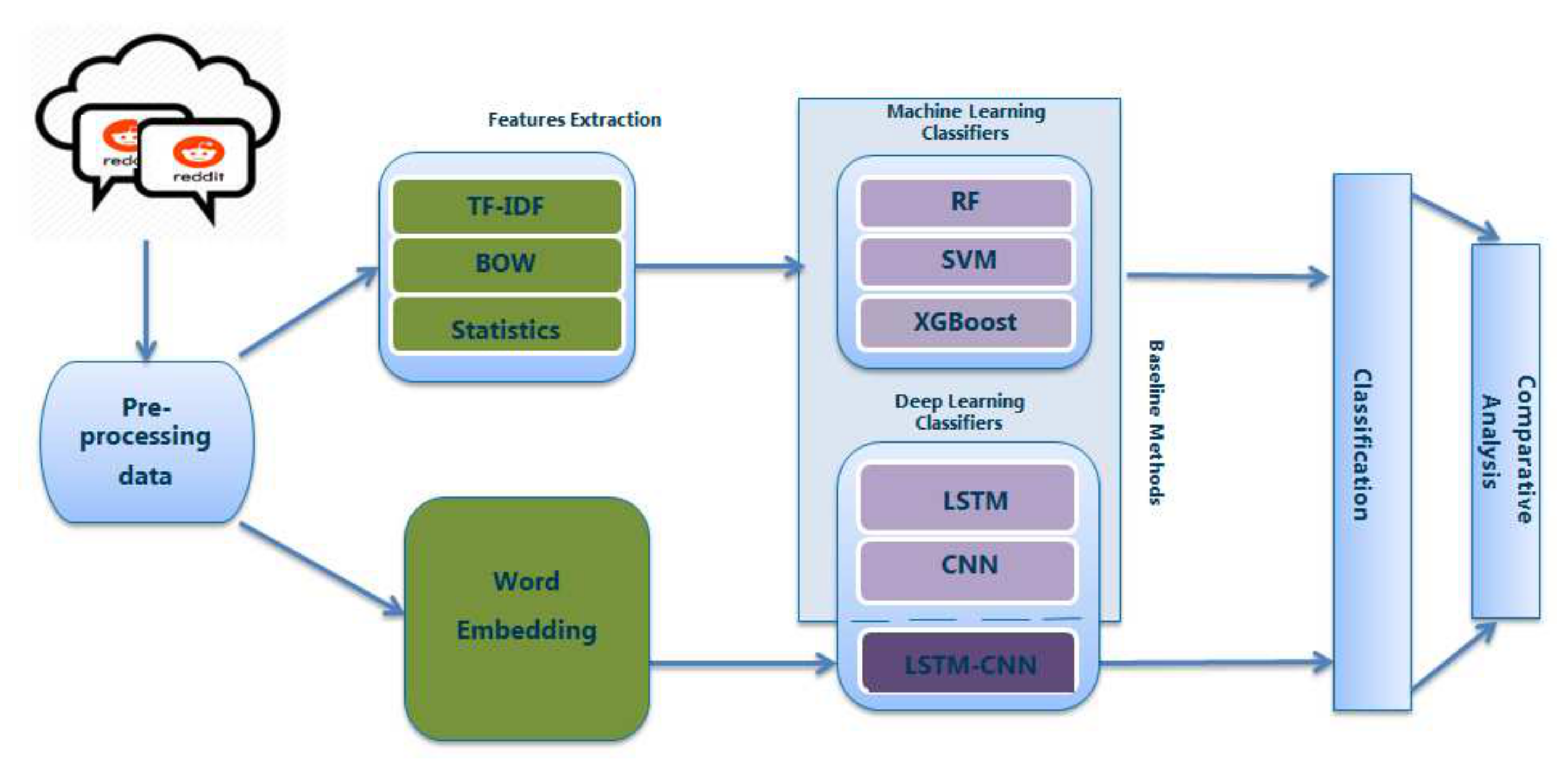
